SYNETIC.ai

What You Get

Multi-Modal Sensor Data

Perfect Multi-Modal Annotations

Geospatial Intelligence

Complete Sensor Calibration

Multiple Format Support

See the full technical specification of folder structure, metadata files, GeoTIFFs, and multi-format support

Geospatially Accurate Data

Every image includes GeoTIFF and KML files with perfect coordinate accuracy. Direct import to GIS systems. Essential for autonomous navigation, defense applications, and geospatial AI. Most synthetic data companies can’t deliver this.

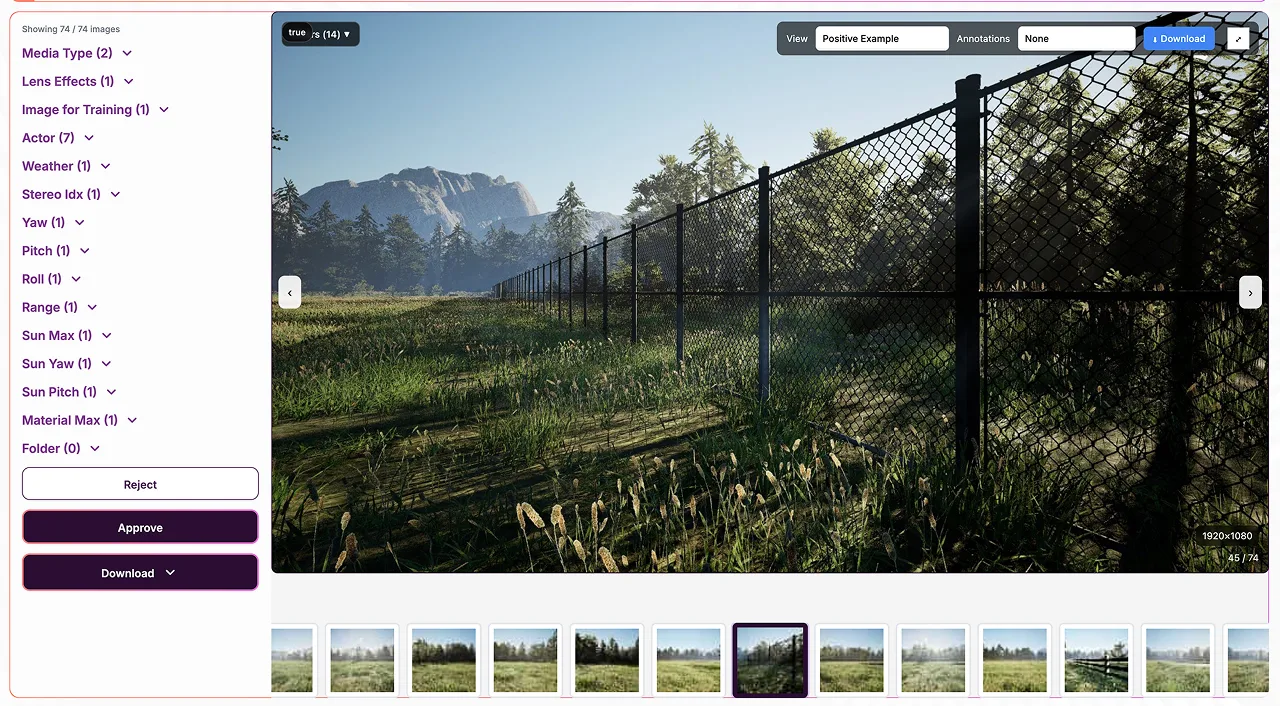

Review Every Asset Before Download

The interactive validation tool lets you inspect, verify, and selectively download every asset.

Complete quality control at your fingertips.

Multi-Modal Visualization

Toggle between RGB, depth maps, thermal imaging, LiDAR point clouds, and radar returns. See exactly what you’re getting across all sensor modalities.

Annotation Overlay

Interactive annotation viewing with bounding boxes, segmentation masks, and keypoints. Verify pixel-perfect accuracy and zero labeling errors.

Geospatial Map View

View GeoTIFF and KML data overlaid on interactive maps. Verify coordinate accuracy and GIS integration readiness.
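As an illustration of what checking coordinate accuracy can involve, the sketch below maps a pixel position to a map coordinate and back using a six-term affine geotransform in the ordering GDAL uses. The transform values are hypothetical and are not taken from a Synetic dataset; in practice you would read them from the GeoTIFF with a GIS library.

```python
from typing import Tuple

# Six-term affine geotransform in GDAL ordering:
# (origin_x, pixel_width, row_rotation, origin_y, col_rotation, pixel_height)
GeoTransform = Tuple[float, float, float, float, float, float]


def pixel_to_geo(gt: GeoTransform, col: float, row: float) -> Tuple[float, float]:
    """Map a pixel (col, row) to map coordinates (x, y)."""
    x = gt[0] + col * gt[1] + row * gt[2]
    y = gt[3] + col * gt[4] + row * gt[5]
    return x, y


def geo_to_pixel(gt: GeoTransform, x: float, y: float) -> Tuple[float, float]:
    """Invert the affine transform to recover fractional pixel coordinates."""
    det = gt[1] * gt[5] - gt[2] * gt[4]
    if det == 0:
        raise ValueError("geotransform is not invertible")
    dx, dy = x - gt[0], y - gt[3]
    col = (gt[5] * dx - gt[2] * dy) / det
    row = (-gt[4] * dx + gt[1] * dy) / det
    return col, row


# Hypothetical north-up transform: 0.001 degrees per pixel, no rotation.
example_gt: GeoTransform = (-81.0, 0.001, 0.0, 34.0, 0.0, -0.001)
lon, lat = pixel_to_geo(example_gt, 100, 200)
print(lon, lat)
```

A round trip through `pixel_to_geo` and `geo_to_pixel` should return the starting pixel to within floating-point error, which makes a quick sanity check before importing into a GIS.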

Metadata Inspector

Review complete camera intrinsics, extrinsics, sensor calibration data, timestamps, and technical specifications for every image.
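To show how intrinsics and extrinsics are typically used together, here is a minimal sketch of projecting a 3D world point into pixel coordinates. It assumes an ideal pinhole camera with no lens distortion and a world-to-camera extrinsic convention; the parameter names (`fx`, `fy`, `cx`, `cy`, `rotation`, `translation`) are illustrative and may not match the field names in the delivered metadata.

```python
from typing import Sequence, Tuple


def project_point(
    point_world: Sequence[float],
    rotation: Sequence[Sequence[float]],  # 3x3 world-to-camera rotation (assumed convention)
    translation: Sequence[float],         # 3-vector world-to-camera translation
    fx: float, fy: float,                 # focal lengths in pixels
    cx: float, cy: float,                 # principal point in pixels
) -> Tuple[float, float]:
    """Project a world point to pixel coordinates with an ideal pinhole model."""
    # Extrinsics: transform the point into the camera frame.
    xc, yc, zc = (
        sum(rotation[i][j] * point_world[j] for j in range(3)) + translation[i]
        for i in range(3)
    )
    if zc <= 0:
        raise ValueError("point is behind the camera")
    # Intrinsics: perspective divide, then scale and shift to pixels.
    return fx * xc / zc + cx, fy * yc / zc + cy


identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
u, v = project_point([1.0, 0.5, 2.0], identity, [0.0, 0.0, 0.0],
                     fx=1000.0, fy=1000.0, cx=960.0, cy=540.0)
print(u, v)  # 1460.0 790.0
```

Projecting a labeled 3D keypoint this way and comparing it with the 2D annotation is one way to confirm that calibration data and annotations agree.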

Selective Download

Reject images you don’t want. Download individual assets or custom subsets. Complete control over your final dataset composition.

Quality Verification

Inspect edge cases, verify sensor alignment, check annotation precision. Ensure every asset meets your quality standards before integration.

Technical Specifications

Sensor Modalities

Multi-modal sensor simulation: RGB, depth, thermal (IR), LiDAR, radar. Perfect sensor fusion with synchronized timestamps and calibrated alignment. Supports any sensor configuration for autonomous systems, robotics, and defense.
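If you want to confirm timestamp synchronization on your side, one simple check is nearest-timestamp matching between two sensor streams. The sketch below is an assumption about how such a check might look, not part of the Synetic tooling; the stream names, timestamps, and tolerance are hypothetical.

```python
from bisect import bisect_left
from typing import List, Optional, Sequence


def match_nearest(
    reference_ts: Sequence[float],
    other_ts: Sequence[float],  # must be sorted ascending
    tolerance: float,
) -> List[Optional[int]]:
    """For each reference timestamp, return the index of the nearest
    timestamp in other_ts, or None if nothing lies within tolerance."""
    matches: List[Optional[int]] = []
    for t in reference_ts:
        i = bisect_left(other_ts, t)
        # Only the neighbours on either side of the insertion point can be nearest.
        candidates = [j for j in (i - 1, i) if 0 <= j < len(other_ts)]
        best = min(candidates, key=lambda j: abs(other_ts[j] - t), default=None)
        if best is not None and abs(other_ts[best] - t) <= tolerance:
            matches.append(best)
        else:
            matches.append(None)
    return matches


# Hypothetical timestamps in seconds for an RGB stream and a LiDAR stream.
rgb = [0.0, 0.1, 0.2]
lidar = [0.01, 0.12, 0.5]
print(match_nearest(rgb, lidar, tolerance=0.03))  # [0, 1, None]
```

Any `None` in the result flags a frame with no counterpart inside the tolerance, which is worth inspecting before sensor fusion training.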

Physics-Based Rendering

Photorealistic materials with accurate light transport simulation. Real-world camera response modeling. Physically accurate sensor characteristics. No domain gap between synthetic and real.

Dataset Size

From 10,000 to 500,000+ images. Size optimized based on your object complexity and variation requirements. Unlimited variations possible.

Annotation Precision

Pixel-perfect accuracy guaranteed. Annotations are generated automatically from the 3D scene, eliminating human labeling errors completely. Perfect consistency across millions of images.

Image Resolution

Any resolution from 640×640 to 8K+. Match your target deployment camera specifications exactly. Multiple resolutions are available in the same dataset.

Geospatial Precision

Every image includes GeoTIFF and KML files with accurate coordinate data. Direct import to GIS systems. Perfect for autonomous navigation, geospatial AI, and defense applications.

University-Verified Performance

Independent validation by University of South Carolina

34%

Better mAP than real-world data

7

Model architectures tested

100%

Tested on real-world validation

0

Human annotation errors

Our synthetic training datasets don’t just match real-world data; they outperform it. Peer-reviewed research proves models trained on pure synthetic data achieve superior generalization, better recall, and more robust performance across edge cases.

This isn’t theory. It’s measured, verified, and repeatable.

Built For Your Industry

Autonomous Vehicles

ADAS testing, sensor fusion validation, LiDAR + camera + radar integration, pedestrian detection, traffic analysis

Typical: Images with multi-modal sensors across all weather, lighting, and traffic scenarios

Robotics

Multi-modal perception, LiDAR SLAM, object manipulation, navigation, sensor fusion training

Typical: Images with full 360° coverage, depth, and multi-sensor data

Aerospace & Defense

Multi-spectral imaging, radar simulation, thermal detection, geospatial intelligence, target recognition

Typical: Images with LiDAR, radar, IR, and perfect geospatial accuracy

Manufacturing

Defect detection with thermal imaging, quality control, safety monitoring, assembly verification

Typical: Images covering 20+ defect types with multi-spectral data

Agriculture

Crop detection with multi-spectral, yield estimation, disease identification, precision agriculture

Typical: Images with thermal, RGB, and depth across all seasons

Construction

Safety monitoring with thermal, equipment tracking, progress monitoring, hazard detection

Typical: Images covering all job site conditions with depth and thermal

Fully Customizable To Your Needs

Sensors & Modalities

Objects & Scenarios

Environments

Annotations

Frequently Asked Questions

Do you support multi-modal sensor data?

Yes. We generate RGB, depth, thermal (IR), LiDAR, and radar data with perfect sensor alignment and synchronization. Essential for sensor fusion training in autonomous systems, robotics, and defense applications.

How accurate is the geospatial data?

Every image includes GeoTIFF and KML files with perfect coordinate accuracy. You can import directly into any GIS system (verified with Google Earth Pro). Essential for autonomous navigation, defense, and geospatial AI applications.

Will models trained on synthetic data work in the real world?

Yes. Our physics-based rendering ensures synthetic and real data are statistically similar. The 34% performance improvement was measured on real-world validation data, not synthetic tests. There’s no domain gap.

How quickly can you deliver?

Typically 1-2 weeks for datasets. Actual timeline depends on project complexity and start date. Compare that to 6-18 months for traditional real-world data collection with multi-modal sensors and geospatial accuracy.

Can you handle custom requirements?

Absolutely. We’ve built 150+ models across dozens of industries with custom sensor configurations, environments, and annotation types. If you can describe it, we can generate it.

Do I need to provide any real-world data?

No. We generate everything synthetically. You can add real data later if you want, but it’s not required (and the USC white paper shows pure synthetic actually performs better).

How is the dataset delivered?

We deliver datasets in a structured folder hierarchy with separate directories for images (/datas), metadata (/metas), and annotations (/yolos). You’ll receive multiple annotation formats (YOLO, COCO JSON, Pascal VOC XML), complete camera calibration data, GeoTIFF/KML files for geospatial data, and comprehensive CSV/JSON metadata files. Everything is organized, documented, and ready to use. View the complete dataset documentation (PDF) →
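Because several annotation formats ship together, it can help to see how two of them relate. The sketch below converts one bounding-box line in the standard YOLO text format (class id followed by normalized center x, center y, width, height) into a COCO-style `[x_min, y_min, width, height]` box in pixels. It assumes plain detection labels; segmentation or keypoint lines carry more fields, and the exact file layout inside the delivered directories is not specified here.

```python
from typing import List, Tuple


def yolo_line_to_coco_bbox(
    line: str, image_width: int, image_height: int
) -> Tuple[int, List[float]]:
    """Convert 'class cx cy w h' (normalized) to (class_id, [x_min, y_min, w, h]) in pixels."""
    parts = line.split()
    if len(parts) != 5:
        raise ValueError(f"expected 5 fields, got {len(parts)}")
    class_id = int(parts[0])
    cx, cy, w, h = (float(v) for v in parts[1:])
    box_w = w * image_width
    box_h = h * image_height
    # YOLO stores the box center; COCO stores the top-left corner.
    x_min = cx * image_width - box_w / 2
    y_min = cy * image_height - box_h / 2
    return class_id, [x_min, y_min, box_w, box_h]


# Hypothetical label line for a 640x640 image.
print(yolo_line_to_coco_bbox("2 0.5 0.5 0.25 0.5", 640, 640))
# (2, [240.0, 160.0, 160.0, 320.0])
```

Running a conversion like this against the supplied COCO JSON is a quick way to confirm that the formats describe the same boxes.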

Can I test the data before committing?

Yes. We’ll train a model on our synthetic data and test it against your existing real-world validation set, at our expense. You see the performance before making any purchase decision. If the results don’t exceed your expectations, you can walk away at no cost or iterate with us to cover missing edge cases.

What’s included in the guarantee?

If our synthetic-trained model doesn’t meet or exceed your expectations (or doesn’t outperform your existing real-world trained models), we refund 100%. We’re that confident in our data quality and the USC validation research backing it.

Ready to train on the world’s best data?

Join the validation challenge: 3 of 10 spots available at 50% off

Questions? Email sales@synetic.ai