SYNETIC.ai

The Only Computer Vision AI With A Performance Guarantee

Models trained on our synthetic data score up to 34% higher mAP than models trained on real-world datasets (university-verified). If ours don’t outperform your current approach, get a full refund. Delivered in weeks, not months.

Trusted by defense contractors, manufacturers, and security companies

Traditional vs. Synetic: The Clear Winner

Same training goals, completely different results

| Metric | Traditional Approach | Synetic Approach |
|---|---|---|
| Time to Deploy | 6-18 months | 2 weeks |
| Typical Accuracy | 70-85% | 90-99% |
| Dataset Cost | $500k+ | $25k |
| Label Accuracy | ~90% | 100% |

10-40x Faster, 90% Cheaper, 34% More Accurate

Our synthetic training data is university-verified to outperform real-world datasets. If it doesn’t beat your current approach, get a full refund.
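The headline ratios can be checked against the comparison figures above. This is a minimal back-of-the-envelope sketch in Python, assuming an average of 52/12 weeks per month. Because “$500k+” is a lower bound, the computed 95% saving is a floor for that comparison, which is consistent with the “90% cheaper” headline.

```python
# Back-of-the-envelope check of the headline ratios, using only the
# figures from the traditional-vs-Synetic comparison.
# Assumption: a month averages 52/12 weeks.


def speedup_range(months_low: float, months_high: float, weeks: float) -> tuple[float, float]:
    """How many times faster a `weeks`-long project is than one taking
    `months_low` to `months_high` months."""
    # Multiply before dividing so whole-number inputs stay exact.
    return (months_low * 52 / (12 * weeks),
            months_high * 52 / (12 * weeks))


def cost_savings(traditional: float, synetic: float) -> float:
    """Fractional saving of `synetic` relative to `traditional`."""
    return 1 - synetic / traditional


low, high = speedup_range(6, 18, 2)
print(f"{low:.0f}x to {high:.0f}x faster")             # 13x to 39x faster
print(f"{cost_savings(500_000, 25_000):.0%} cheaper")  # 95% cheaper
```

Since the traditional timeline is a range, the speed-up is a range too (13x to 39x), which rounds to the “10-40x” headline.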

See The Platform In Action

From data generation to real-time deployment—here’s how it works

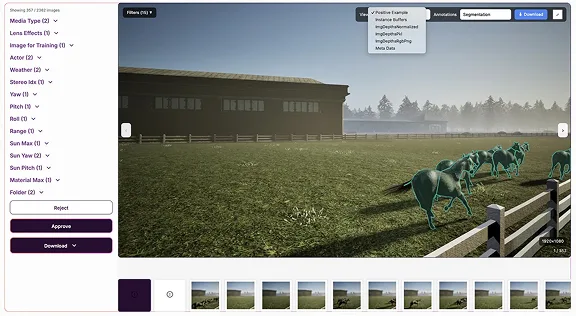

Generate Training Data

Control every parameter: lighting, weather, camera angles, actors, and more. Generate unlimited variations, each labeled automatically and exactly.
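One common way to turn controllable scene parameters into many variations is to sample them at random for each rendered image, a technique usually called domain randomization. The sketch below is a minimal illustration of that idea in Python. The parameter names and ranges (`SceneParams`, `sun_elevation_deg`, and so on) are hypothetical and are not Synetic’s actual controls.

```python
import random
from dataclasses import dataclass


# Hypothetical scene parameters, for illustration only; the platform's
# real controls and their names are not documented here.
@dataclass(frozen=True)
class SceneParams:
    sun_elevation_deg: float  # lighting
    weather: str              # one of WEATHER
    camera_height_m: float    # camera placement
    camera_pitch_deg: float   # camera angle (negative = looking down)
    num_actors: int           # people/objects placed in the scene


WEATHER = ("clear", "overcast", "rain", "fog", "snow")


def sample_scene(rng: random.Random) -> SceneParams:
    """Draw one randomized scene configuration."""
    return SceneParams(
        sun_elevation_deg=rng.uniform(5.0, 85.0),
        weather=rng.choice(WEATHER),
        camera_height_m=rng.uniform(1.5, 30.0),
        camera_pitch_deg=rng.uniform(-60.0, 0.0),
        num_actors=rng.randint(0, 20),
    )


def sample_scenes(n: int, seed: int = 0) -> list[SceneParams]:
    """Seeded sampling, so the same scene list can be regenerated exactly."""
    rng = random.Random(seed)
    return [sample_scene(rng) for _ in range(n)]


scenes = sample_scenes(1000, seed=42)
print(scenes[0])
```

Seeding a dedicated `random.Random` instance, rather than the global generator, keeps each generated batch reproducible.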

Perfect Annotations

Every image includes pixel-perfect annotations: bounding boxes, segmentation masks, depth maps, keypoints, and complete camera metadata.
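Because a renderer knows exactly which pixels belong to each object, box labels can be computed from an object’s instance mask instead of being drawn by hand. This is why synthetic labels can be pixel-exact. The following is a minimal sketch in Python, assuming the mask arrives as a 2D grid of 0/1 values. That format is a hypothetical illustration, not Synetic’s export format. The output uses the `[x, y, width, height]` layout that COCO uses for boxes.

```python
from typing import Optional, Sequence

# (x_min, y_min, width, height) in whole pixels, COCO-style layout.
BBox = tuple[int, int, int, int]


def mask_to_bbox(mask: Sequence[Sequence[int]]) -> Optional[BBox]:
    """Return the tightest box containing every nonzero pixel, or None
    if the mask is empty (object fully occluded or out of frame)."""
    ys = [y for y, row in enumerate(mask) if any(row)]
    xs = [x for row in mask for x, v in enumerate(row) if v]
    if not ys:
        return None
    x_min, x_max = min(xs), max(xs)
    y_min, y_max = ys[0], ys[-1]
    # Inclusive pixel extents, so add 1 to get width/height.
    return (x_min, y_min, x_max - x_min + 1, y_max - y_min + 1)


mask = [
    [0, 0, 0, 0, 0],
    [0, 1, 1, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 0, 0, 0],
]
print(mask_to_bbox(mask))  # (1, 1, 3, 2)
```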

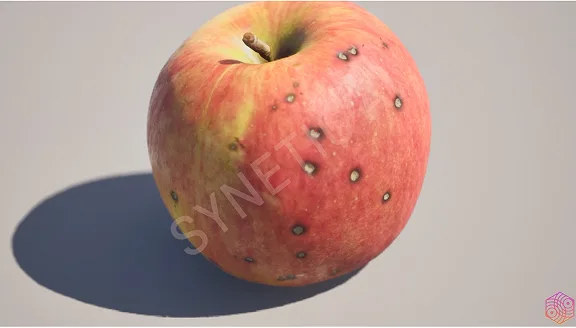

Photorealistic Quality

Physics-based rendering creates images indistinguishable from real-world data—no “domain gap” to overcome.

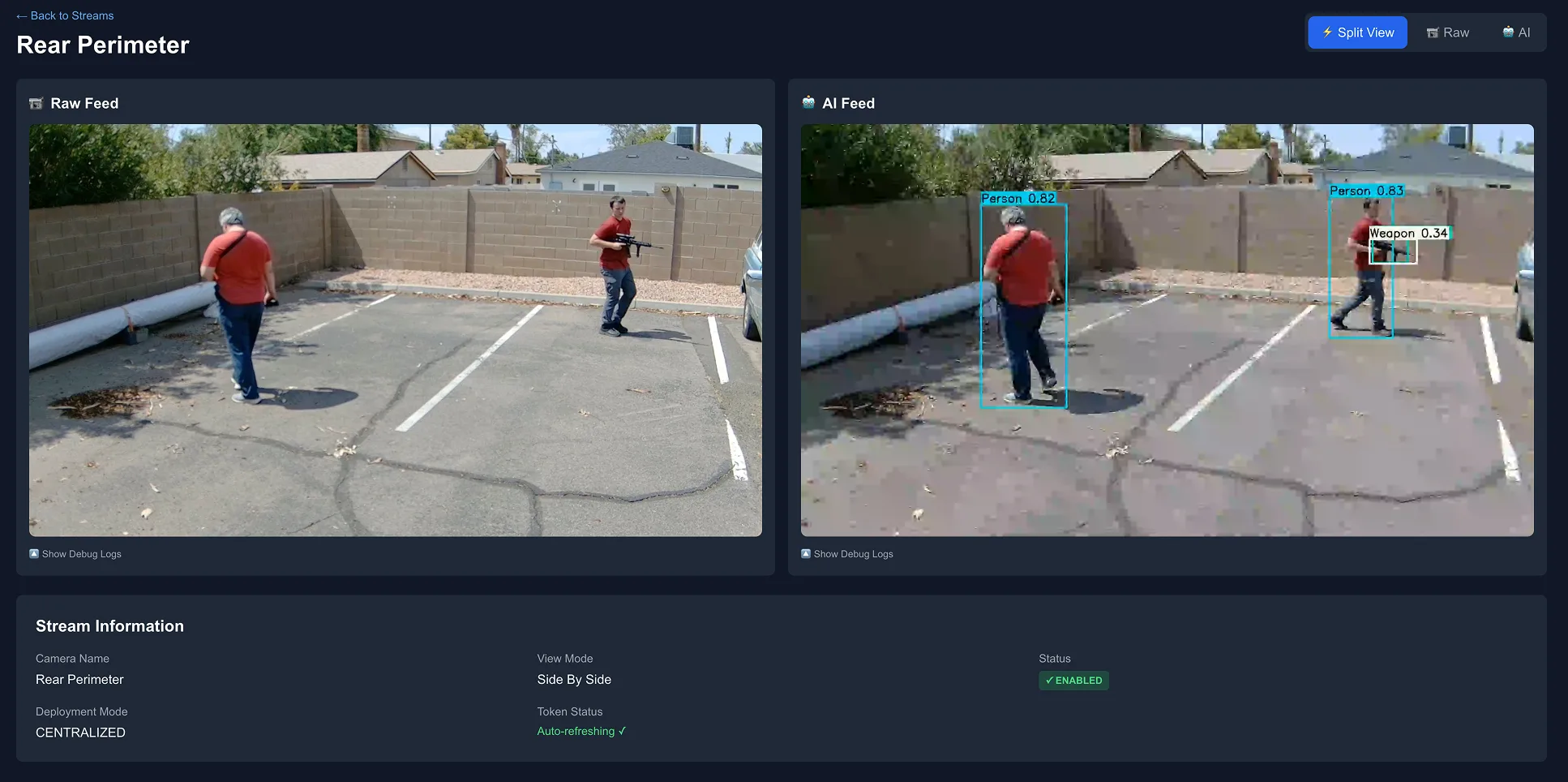

Deploy Anywhere

See your models running in real-time. Our platform handles edge deployment, cloud inference, and continuous monitoring.

Peer Reviewed Research

34% Better Performance. 100% Synthetic Data. University-Verified.

We didn’t just claim it works—we proved it. In partnership with the University of South Carolina, we trained models exclusively on synthetic data and tested them on real-world images.

The Results: Consistent Improvement Across All Architectures

| Model | Real-only mAP | Synetic mAP | Improvement |
|---|---|---|---|
| YOLOv12 | 0.240 | 0.322 | +34.24% |
| YOLOv11 | 0.260 | 0.344 | +32.09% |
| YOLOv8 | 0.243 | 0.290 | +19.37% |
| YOLOv5 | 0.261 | 0.313 | +20.02% |
| RT-DETR | 0.450 | 0.455 | +1.20% |

Seven different model architectures were tested, and all showed improvement with synthetic training data.

Validated on a real-world test set. Full methodology peer-reviewed by USC researchers.
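The Improvement column is the relative change in mAP, (Synetic − real) / real. The following minimal Python sketch recomputes it from the three-decimal mAP values in the table above. The results differ slightly from the published column (for example, 34.17% rather than 34.24% for YOLOv12). Every such difference falls within the range that rounding the mAP values to three decimals can produce.

```python
# Relative mAP improvement, as a percentage: (synthetic - real) / real * 100.
# Inputs are the rounded mAP figures from the results table.
RESULTS = {
    "YOLOv12": (0.240, 0.322),
    "YOLOv11": (0.260, 0.344),
    "YOLOv8": (0.243, 0.290),
    "YOLOv5": (0.261, 0.313),
    "RT-DETR": (0.450, 0.455),
}


def relative_improvement(real_map: float, synetic_map: float) -> float:
    """Percentage change in mAP from real-only to synthetic training."""
    return (synetic_map - real_map) / real_map * 100


for model, (real_map, synetic_map) in RESULTS.items():
    print(f"{model:8s} {relative_improvement(real_map, synetic_map):+.2f}%")
```

A relative measure makes gains on weaker baselines look larger. RT-DETR’s +1.20% corresponds to an absolute gain of only 0.005 mAP, but it starts from the highest baseline.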

Download the Full Study

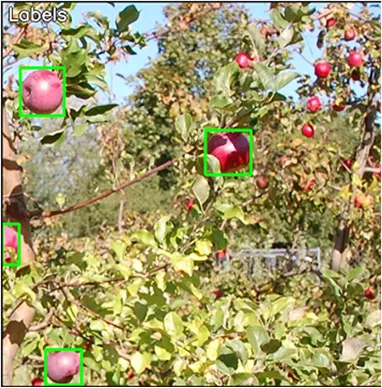

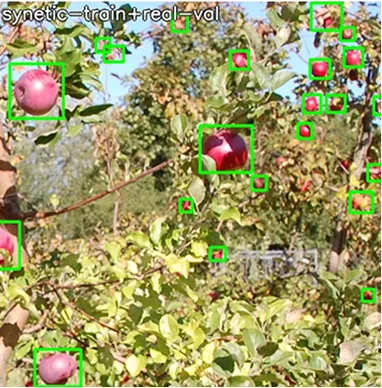

Synthetic Models Detect What Humans Miss

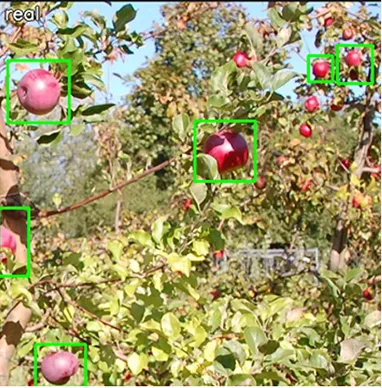

Ground Truth (Incomplete)

Human labels miss several apples

Real-Trained Model

Misses apples, limited detection

Synetic-Trained Model

Detects all apples, including those missed by humans

Synetic-trained models (right) detected all apples, including those missed in the human-labeled “ground truth” (left). Real-trained models (center) missed multiple apples. What appear to be false positives from our model are actually correct detections.
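The effect in the apple example above can be reproduced with a simplified precision calculation. When the ground truth misses an object, a correct detection of that object has no label to match, so it is scored as a false positive. The following is a minimal sketch in Python. The box coordinates are made up, and the greedy matching at an IoU threshold of 0.5 ignores the confidence ranking that full mAP evaluation uses.

```python
# How missing ground-truth labels turn correct detections into "false positives".
# Boxes are (x_min, y_min, x_max, y_max); coordinates are illustrative.
Box = tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def precision(preds: list[Box], truth: list[Box], thresh: float = 0.5) -> float:
    """Greedy one-to-one matching; unmatched predictions count as false positives."""
    unmatched = list(truth)
    tp = 0
    for p in preds:
        best = max(unmatched, key=lambda t: iou(p, t), default=None)
        if best is not None and iou(p, best) >= thresh:
            unmatched.remove(best)  # each label can be matched only once
            tp += 1
    return tp / len(preds) if preds else 0.0


apples = [(0, 0, 10, 10), (20, 0, 30, 10), (40, 0, 50, 10)]  # three real apples
preds = list(apples)            # the model finds all three
human_labels = apples[:2]       # the annotator missed one

print(precision(preds, human_labels))  # 0.6666666666666666
print(precision(preds, apples))        # 1.0
```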

“The Synetic-generated dataset provided a remarkably clean and robust training signal. Our analysis confirmed the superior feature diversity of the synthetic data.”

Dr. Ramtin Zand & James Blake Seekings

University of South Carolina

Choose Your Solution

Get exactly what you need—from data to fully managed deployment

Synthetic Training Data

We generate it, you train it

Perfect for: ML teams, researchers, consultancies

Standard price: $30,000

Validation Challenge price: $15,000*

Custom Trained Models

We build it, you deploy it

Perfect for: Companies with deployment capability

Standard price: $50,000

Validation Challenge price: $25,000*

Enterprise Solution

Fully managed computer vision platform

Perfect for: Ongoing partnerships or custom deliverables

Custom pricing (Validation Challenge pricing available)

*Typical prices; actual pricing varies with project requirements.

Help us expand the evidence base for synthetic data superiority.

Get 50% off our services while building the future of computer vision together

What is This Program?

Our white paper with the University of South Carolina showed synthetic data outperforming real-world data by up to 34% (mAP) in agricultural vision. Now we’re expanding that evidence across industries.

We’re inviting 10 pioneering companies to deploy Synetic-trained computer vision systems at a significant discount, in exchange for allowing us to document your results as case studies.

Your success story becomes validation that synthetic data works across defense, manufacturing, autonomous systems, and beyond—not just agriculture.

Why Participate?

50% Discount

Get our full service offerings at half price during this validation period

Early Adopter Status

Be among the first companies to deploy proven synthetic-trained AI in your industry

Independent Validation

Your results contribute to peer-reviewed research validating synthetic data

Thought Leadership

Be featured as an innovation leader in published case studies and whitepapers

Proven Across Industries


Manufacturing QC

Detect defects before production starts

Agriculture

Automate crop detection and yield estimation

Security

Identify threats and anomalies in real-time

Robotics

Train perception models entirely in simulation

Retail Analytics

Track inventory, customers, and behaviors

Logistics

Monitor safety, packages, and operations

Frequently Asked Questions

How can synthetic data be better than real?

Real-world datasets are limited by what you can photograph and afford to label. Ours cover edge cases systematically, with perfect labels. The USC white paper shows it: up to 34% better performance across multiple architectures.

Will models trained on synthetic data work on my real cameras?

Yes. Our physics-based rendering ensures synthetic and real data are statistically similar. There’s no “domain gap”—the 34% improvement was measured on real-world validation data, not synthetic tests.

How long does it actually take?

1 week for datasets, 2 weeks for custom-trained models, and 1 week for deployment. Compare that to 6-18 months for traditional real-world data collection.

What if my use case is unique?

That’s exactly what we’re built for. Tell us what you need and we’ll generate custom training data for it. We’ve built 150+ models across dozens of industries. If you can describe it, we can generate it.

Do I need to provide my own data?

No. We generate everything synthetically. You can add real data later if you want, but it’s not required (and the white paper found that adding real data actually hurt performance).

What’s included in the money-back guarantee?

If our synthetic-trained model doesn’t meet or exceed your expectations (or doesn’t outperform your existing real-world trained models), we refund 100%. We’re that confident.

Ready to build better models?

Join the validation challenge: 3 of 10 spots available at 50% off with 100% money-back guarantee

Questions? Email sales@synetic.ai or schedule a 15-min call