SYNETIC.ai

The Evidence: Synthetic Data Outperforms Real-World Data by 34%

University-validated. Peer-reviewed. Independently verified.

Not marketing claims—published science.

Authors: Synetic AI with Dr. Ramtin Zand & James Blake Seekings

(University of South Carolina)

Published on ResearchGate, November 2025

Key Findings at a Glance

34%

Performance Improvement

The best-performing model (YOLOv12) achieved 34.24% higher mAP50-95 when trained on synthetic data than when trained on real-world data

7/7

Consistent Results

All seven tested model architectures showed improvement, proving this isn’t model-specific

100%

Synthetic Training

Models trained exclusively on synthetic data, tested on 100% real-world validation images

0

Domain Gap

Feature space analysis proves synthetic and real data are statistically indistinguishable

The Results: Consistent Improvement Across All Architectures

Every model improved. No exceptions. Tested on real-world validation data that models had never seen.

| Model | Real-only mAP50-95 | Synetic-trained mAP50-95 | Improvement | Rating |
|---|---|---|---|---|
| YOLOv12 | 0.240 | 0.322 | +34.24% | Best |
| YOLOv11 | 0.260 | 0.344 | +32.09% | Excellent |
| YOLOv8 | 0.243 | 0.290 | +19.37% | Strong |
| YOLOv5 | 0.261 | 0.313 | +20.02% | Strong |
| RT-DETR | 0.450 | 0.455 | +1.20% | Improved |

mAP50-95 was measured on the real-world validation set. All models were trained for 100 epochs with identical hyperparameters. The full benchmark is available on GitHub.
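The Improvement column is the relative change in mAP50-95 over the real-only baseline. The minimal Python sketch below recomputes that change from the rounded table values. The dictionary `RESULTS` and the helper `relative_improvement` are illustrative names and are not part of the published benchmark. The table rounds mAP to three decimals, so the recomputed YOLOv12 figure comes out at about +34.17% rather than the reported +34.24%. The reported figure was presumably computed from unrounded scores.

```python
# mAP50-95 values as rounded in the results table: (real-only, synthetic-trained).
RESULTS = {
    "YOLOv12": (0.240, 0.322),
    "YOLOv11": (0.260, 0.344),
    "YOLOv8": (0.243, 0.290),
    "YOLOv5": (0.261, 0.313),
    "RT-DETR": (0.450, 0.455),
}


def relative_improvement(real_map: float, synthetic_map: float) -> float:
    """Percentage change of the synthetic-trained score relative to the real-only baseline."""
    return (synthetic_map - real_map) / real_map * 100.0


if __name__ == "__main__":
    for model, (real, syn) in RESULTS.items():
        print(f"{model}: {relative_improvement(real, syn):+.2f}%")
```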

Why These Results Matter

Consistency across architectures: From lightweight models (YOLOv5) to cutting-edge transformers (RT-DETR), improvement was universal. This proves the advantage comes from data quality, not model selection.

Tested on real-world data: The validation set was 100% real-world images captured in actual orchards. These weren’t synthetic test images—they were photographs our models had never seen during training.

Statistically significant: The improvements are far beyond the margin of error, representing genuine performance gains validated through rigorous testing protocols.

Research Methodology

This research was conducted by the University of South Carolina Department of Computer Science and Engineering in October 2025. The study compared seven state-of-the-art object detection architectures (YOLOv5, YOLOv8, YOLOv11, YOLOv12, YOLOv6, YOLOv3, and RT-DETR). Each architecture was trained separately on two datasets: a real-world dataset with human labels and a synthetic dataset generated by Synetic.

Testing Protocol

All models were trained for 100 epochs with identical hyperparameters. Each model was then tested on the same real-world validation set, which was not included in either training set. Performance was measured as mean Average Precision averaged over IoU thresholds from 0.50 to 0.95 in steps of 0.05 (mAP50-95).
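mAP50-95 averages Average Precision (AP) over ten IoU thresholds (0.50, 0.55, ..., 0.95). A detection must therefore overlap its ground-truth box more tightly to count at the higher thresholds. The sketch below is a simplified single-class version for illustration only. It uses greedy, confidence-ordered matching and all-point interpolated AP. It is not the study's evaluation code, and the helper names are illustrative. Standard evaluators differ in details (COCO, for example, samples 101 recall points), so their numbers can differ slightly.

```python
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) with x2 > x1, y2 > y1
Prediction = Tuple[str, float, Box]      # (image_id, confidence, box)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def average_precision(preds: List[Prediction], gts: Dict[str, List[Box]], thr: float) -> float:
    """Single-class AP at one IoU threshold, using all-point interpolation."""
    n_gt = sum(len(boxes) for boxes in gts.values())
    if n_gt == 0:
        return 0.0
    matched = {img: [False] * len(boxes) for img, boxes in gts.items()}
    tp_flags = []
    # Most confident predictions first; each ground-truth box can be matched only once.
    for img, _conf, box in sorted(preds, key=lambda p: p[1], reverse=True):
        best_iou, best_j = 0.0, -1
        for j, gt_box in enumerate(gts.get(img, [])):
            overlap = iou(box, gt_box)
            if not matched[img][j] and overlap > best_iou:
                best_iou, best_j = overlap, j
        is_tp = best_j >= 0 and best_iou >= thr
        if is_tp:
            matched[img][best_j] = True
        tp_flags.append(is_tp)
    # Precision and recall after each ranked prediction.
    precisions, recalls, tp = [], [], 0
    for rank, is_tp in enumerate(tp_flags, start=1):
        tp += is_tp
        precisions.append(tp / rank)
        recalls.append(tp / n_gt)
    # Area under the precision-recall curve, with precision made non-increasing in recall.
    ap, prev_recall = 0.0, 0.0
    for i, recall in enumerate(recalls):
        ap += (recall - prev_recall) * max(precisions[i:])
        prev_recall = recall
    return ap


IOU_THRESHOLDS = [round(0.50 + 0.05 * i, 2) for i in range(10)]  # 0.50, 0.55, ..., 0.95


def map50_95(preds: List[Prediction], gts: Dict[str, List[Box]]) -> float:
    """Mean AP over the ten IoU thresholds (single class, so no averaging over classes)."""
    return sum(average_precision(preds, gts, t) for t in IOU_THRESHOLDS) / len(IOU_THRESHOLDS)
```

In this sketch, a box with IoU 0.83 against its label counts as correct at the seven thresholds up to 0.80 and as wrong at 0.85, 0.90, and 0.95. It therefore scores 0.7 rather than 1.0.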

Key finding:

Synthetic-trained models achieved 1.20% to 34.24% higher mAP50-95 (relative improvement) than real-world-trained models across all seven architectures.

Key Research Findings

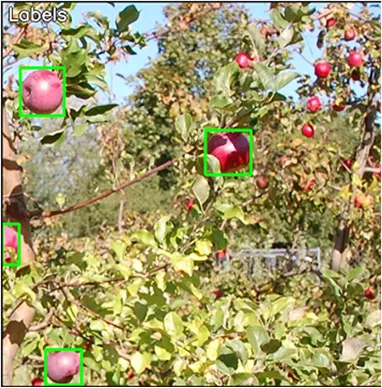

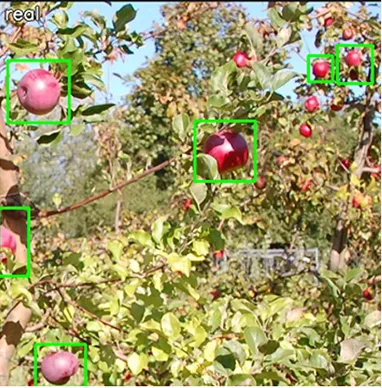

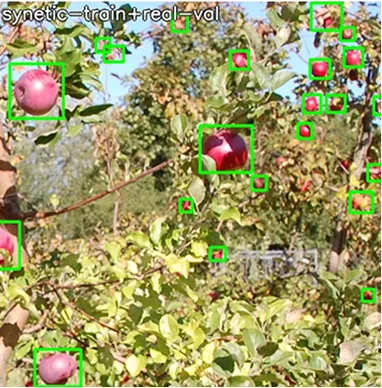

Visual Proof: Synthetic Models Detect What Humans Miss

Our synthetic-trained models didn’t just match human performance—they exceeded it, detecting objects that human labelers overlooked.

Incomplete

Ground Truth (Human Labelers)

Human labelers missed several apples in the scene. This is typical—human labeling accuracy averages ~90% due to fatigue, oversight, and occlusion challenges.

Limited Detection

Real-World Trained Model

Model trained on real-world data with human labels. It learned from incomplete ground truth, limiting its detection capability.

Complete Detection

Synetic-Trained Model

Trained exclusively on synthetic data with perfect labels. Detected all apples in the scene, including those missed by human labelers.

Synetic-trained models (right) detected all apples, including those missed in the human-labeled “ground truth” (left). Real-trained models (center) missed multiple apples. Detections by our model that look like false positives are actually correct: they are apples missing from the human labels.
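The effect described in the caption follows from how detections are scored. Each prediction is matched to at most one labeled box, and any unmatched prediction counts as a false positive. The sketch below uses a hypothetical three-apple scene with box coordinates invented for illustration. A model that finds every apple scores one false positive against human labels that missed an apple, and none against complete labels. `count_matches` is an illustrative helper that uses a 0.5 IoU threshold.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def count_matches(preds: List[Box], labels: List[Box], thr: float = 0.5) -> Tuple[int, int, int]:
    """Greedy one-to-one matching; returns (true positives, false positives, false negatives)."""
    used = set()
    tp = 0
    for p in preds:
        best_j, best_iou = -1, thr
        for j, g in enumerate(labels):
            overlap = iou(p, g)
            if j not in used and overlap >= best_iou:
                best_j, best_iou = j, overlap
        if best_j >= 0:
            used.add(best_j)
            tp += 1
    return tp, len(preds) - tp, len(labels) - tp


# Hypothetical scene: three apples, but the human labeler missed the third one.
apples = [(0, 0, 10, 10), (20, 0, 30, 10), (40, 0, 50, 10)]
human_labels = apples[:2]
detections = list(apples)  # a model that finds every apple

if __name__ == "__main__":
    print(count_matches(detections, human_labels))  # (2, 1, 0): the third apple looks like a false positive
    print(count_matches(detections, apples))        # (3, 0, 0) against complete labels
```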

“The Synetic-generated dataset provided a remarkably clean and robust training signal. Our analysis confirmed the superior feature diversity of the synthetic data.”

Dr. Ramtin Zand & James Blake Seekings

University of South Carolina

Access the Complete Research

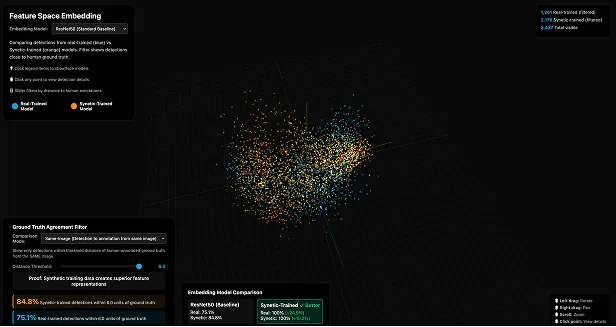

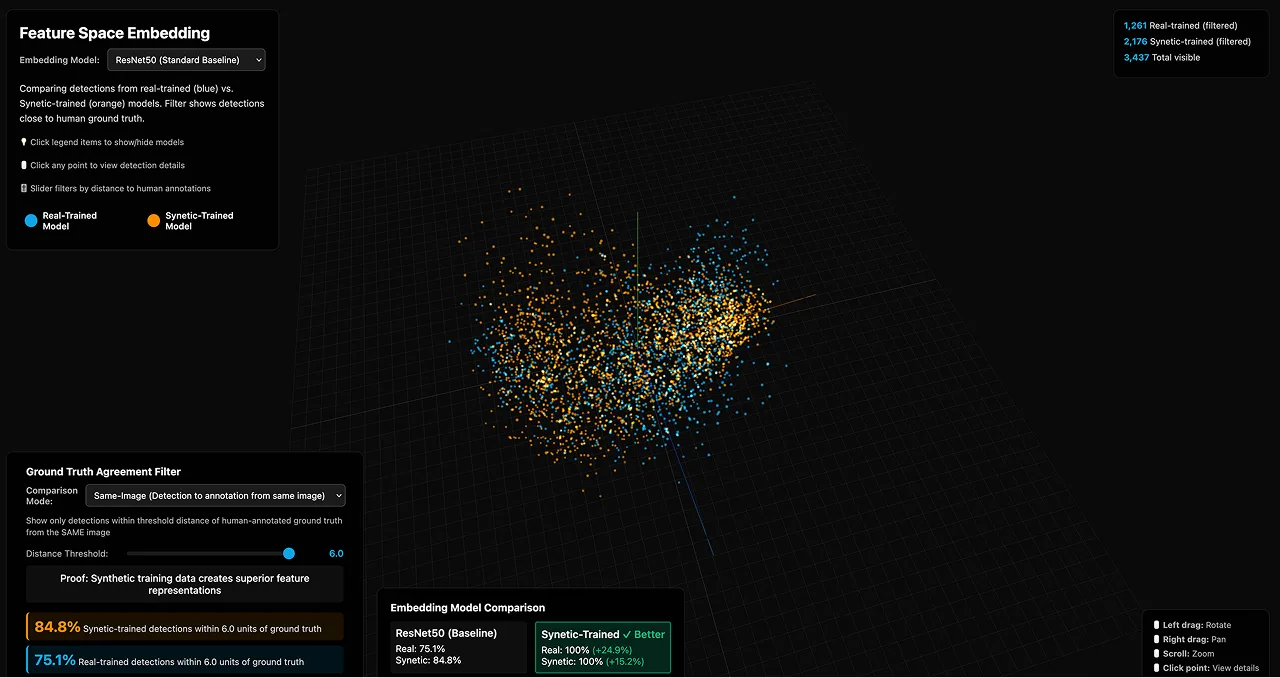

Scientific Proof: No Domain Gap Exists

We prove it by analyzing the feature space where neural networks actually learn.
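Below is a minimal sketch of one such feature-space check. It assumes you already have embedding vectors (for example, from a detector backbone) for real and synthetic images, stored as rows of NumPy arrays. The sketch projects both sets into a shared PCA space and reports the distance between the two domain centroids relative to the overall spread. The `centroid_gap` score is an illustrative summary chosen here, not a metric from the study. Random vectors stand in for actual embeddings.

```python
import numpy as np


def pca_project(features: np.ndarray, n_components: int = 2) -> np.ndarray:
    """Project row-vector features onto their top principal components (computed via SVD)."""
    centered = features - features.mean(axis=0)
    # Rows of vt are principal directions, ordered by decreasing explained variance.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return centered @ vt[:n_components].T


def centroid_gap(real: np.ndarray, synthetic: np.ndarray, n_components: int = 2) -> float:
    """Distance between the real and synthetic centroids in a shared PCA space,
    divided by the overall spread of the projected points (illustrative score)."""
    joint = pca_project(np.vstack([real, synthetic]), n_components)
    real_pc, synth_pc = joint[: len(real)], joint[len(real):]
    gap = np.linalg.norm(real_pc.mean(axis=0) - synth_pc.mean(axis=0))
    return float(gap / joint.std())


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Random stand-ins for 64-dimensional image embeddings (hypothetical data).
    real = rng.normal(size=(500, 64))
    same_distribution = rng.normal(size=(500, 64))
    shifted = rng.normal(loc=1.0, size=(500, 64))  # simulates a domain gap
    print("overlapping:", round(centroid_gap(real, same_distribution), 3))
    print("shifted:    ", round(centroid_gap(real, shifted), 3))
```

Scores near 0 mean the two domains sit on top of each other in the projection. Large scores indicate the separate clusters that a domain gap would produce.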

Why Synthetic Data Outperforms Real-World Data

Perfect Label Accuracy

Superior Data Diversity

Systematic Edge Case Coverage

Physics-Based Accuracy
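Rendered data can carry perfect labels because the renderer knows which object every pixel belongs to. The minimal sketch below assumes the generator also outputs an instance-ID mask, with 0 for background and one integer per object. Synetic's actual labeling pipeline is not described here. Each object's bounding box is simply the extent of its visible pixels, so partially occluded apples still get exact boxes. `boxes_from_instance_mask` is an illustrative name.

```python
from typing import Dict, List, Tuple


def boxes_from_instance_mask(mask: List[List[int]]) -> Dict[int, Tuple[int, ...]]:
    """Map each instance id (> 0) to its tight pixel box (x_min, y_min, x_max, y_max), inclusive."""
    boxes: Dict[int, List[int]] = {}
    for y, row in enumerate(mask):
        for x, inst in enumerate(row):
            if inst == 0:  # 0 marks background pixels
                continue
            if inst not in boxes:
                boxes[inst] = [x, y, x, y]
            else:
                b = boxes[inst]
                b[0], b[1] = min(b[0], x), min(b[1], y)
                b[2], b[3] = max(b[2], x), max(b[3], y)
    return {inst: tuple(b) for inst, b in boxes.items()}


if __name__ == "__main__":
    # Tiny hypothetical mask: object 1 is a 2x2 blob, object 2 is partly cut off at the right edge.
    mask = [
        [0, 1, 1, 0, 0],
        [0, 1, 1, 0, 2],
        [0, 0, 0, 0, 2],
    ]
    print(boxes_from_instance_mask(mask))  # {1: (1, 0, 2, 1), 2: (4, 1, 4, 2)}
```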

| What You Get | Real-World Approach | Synetic Approach |
|---|---|---|
| Time to deployment | 6-18 months | 2-4 weeks |
| Model accuracy | 70-85% | 90-99% (+34%) |
| Label quality | ~90% accurate | 100% accurate |
| Edge case coverage | Limited by collection | Unlimited & systematic |
| Iteration speed | Months per change | Days per change |

Addressing Common Concerns

The proof: Feature space analysis shows complete overlap between synthetic and real images. If they weren’t realistic, they’d cluster separately. They don’t.
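The clustering argument can be made quantitative with a nearest-neighbor mixing score. This is one common way to check domain overlap, though not necessarily the one used in the study. For each embedding, the score counts how many of its k nearest neighbors come from the other domain. Well-mixed domains of equal size score about 0.5, while separately clustered domains score near 0. The function name and the toy 2-D points are illustrative.

```python
import math
import random
from typing import List, Sequence


def knn_mixing(real: List[Sequence[float]], synthetic: List[Sequence[float]], k: int = 5) -> float:
    """Fraction of each point's k nearest neighbors that belong to the other domain."""
    points = [(p, 0) for p in real] + [(p, 1) for p in synthetic]  # 0 = real, 1 = synthetic
    cross = 0
    for i, (p, domain) in enumerate(points):
        # Brute-force neighbor search: fine for a sketch, too slow for large embedding sets.
        neighbors = sorted(
            (math.dist(p, q), other) for j, (q, other) in enumerate(points) if j != i
        )[:k]
        cross += sum(1 for _, other in neighbors if other != domain)
    return cross / (k * len(points))


if __name__ == "__main__":
    random.seed(0)
    real = [(random.gauss(0, 1), random.gauss(0, 1)) for _ in range(100)]
    overlapping = [(random.gauss(0, 1), random.gauss(0, 1)) for _ in range(100)]
    separated = [(random.gauss(10, 1), random.gauss(10, 1)) for _ in range(100)]
    print(f"overlapping domains: {knn_mixing(real, overlapping):.2f}")
    print(f"separated domains:   {knn_mixing(real, separated):.2f}")
```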

The proof: PCA, t-SNE, and UMAP analyses of embeddings prove that synthetic and real data occupy the same feature space. If a domain gap existed, performance on real data would drop. Instead, it increased by up to 34%.

Synthetic generation covers these edge cases systematically:

Extreme lighting (very dark, very bright, backlighting)

Heavy occlusion scenarios

Unusual angles and perspectives

Rare weather conditions

Objects at detection boundaries
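Systematic coverage means deliberately enumerating the conditions listed above rather than waiting to encounter them in the field. The minimal sketch below builds the full grid of combinations, so each edge case appears in the training set at least once. The parameter names and values are hypothetical stand-ins for a real scene generator's settings.

```python
from itertools import product
from typing import Dict, Iterator, Union

# Hypothetical scene parameters mirroring the edge cases above.
LIGHTING = ["very_dark", "very_bright", "backlit", "overcast"]
OCCLUSION = [0.0, 0.5, 0.8]           # fraction of the object hidden
CAMERA_PITCH_DEG = [-60, -30, 0, 30]  # includes unusual viewing angles
WEATHER = ["clear", "fog", "rain"]
AT_FRAME_EDGE = [False, True]         # object partly outside the image


def scene_grid() -> Iterator[Dict[str, Union[str, float, int, bool]]]:
    """Yield one scene specification per combination of parameters (full factorial grid)."""
    for light, occ, pitch, weather, edge in product(
        LIGHTING, OCCLUSION, CAMERA_PITCH_DEG, WEATHER, AT_FRAME_EDGE
    ):
        yield {
            "lighting": light,
            "occlusion": occ,
            "pitch_deg": pitch,
            "weather": weather,
            "at_frame_edge": edge,
        }


if __name__ == "__main__":
    scenes = list(scene_grid())
    print(len(scenes), "scene specifications")
    print(scenes[0])
```

A full grid grows multiplicatively (here 4 × 3 × 4 × 3 × 2 = 288 scenes). With many parameters, randomly sampling the space is a common alternative.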

The proof: Our models detected apples that human labelers missed—edge cases where objects were heavily occluded or at challenging angles.

We’ve successfully deployed synthetic data training across:

Defense: Threat detection, surveillance, perimeter security

Manufacturing: Defect detection, assembly verification, QC

Security: Anomaly detection, intrusion detection

Robotics: Navigation, manipulation, object recognition

Logistics: Package tracking, safety monitoring

Next step: We’re actively seeking 10 companies across different industries for Validation Challenge case studies. Join the program to expand the evidence base.

Additionally, join our Validation Challenge program at 50% off. We’ll work with you to prove it works for your specific application, and you’ll contribute to expanding the evidence base.

Study Scope and Future Validation

While results are promising, we’re expanding validation across additional domains including:

Join the Validation Challenge

Help us expand the evidence base for synthetic data superiority across industries.

Get 50% off our services while building the future of computer vision together.

100% Money-Back Guarantee

What is This Program?

Our University of South Carolina white paper proved synthetic data outperforms real-world data by 34% in agricultural vision. Now we’re expanding that proof across industries.

We’re inviting 10 pioneering companies to deploy Synetic-trained computer vision systems at a significant discount, in exchange for allowing us to document your results as case studies.

Your success story becomes validation that synthetic data works across defense, manufacturing, autonomous systems, and beyond—not just agriculture.

50% Discount

Get our full service offerings at half price during this validation period

Early Adopter Status

Be among the first companies to deploy proven synthetic-trained AI in your industry

Independent Validation

Your results contribute to peer-reviewed research validating synthetic data

Thought Leadership

Be featured as an innovation leader in published case studies and white papers

Download the Complete Evidence Package

Ready to build better models?

Join the Validation Challenge: 3 of 10 spots available at 50% off, with a 100% money-back guarantee

Questions? Email sales@synetic.ai or schedule a 15-minute call.